Nonidentifiable models¶

[1]:

import warnings

import numpy as np

import pandas as pd

import numba

import scipy.optimize

import scipy.stats as st

import bokeh_catplot

import bebi103

import holoviews as hv

hv.extension('bokeh')

import bokeh.io

import bokeh.plotting

bokeh.io.output_notebook()

In this lesson, we will show how nonidentifiability can be lurking in an analysis.

The theoretical model¶

A human immunodeficiency virus (HIV) is a virus that causes host organisms to develop a weaker, and sometimes ineffective, immune system. HIV inserts its viral RNA into a target cell of the immune system. This virus gets reverse transcribed into DNA and integrated into the host cell’s chromosomes. The host cell will then transcribe the integrated DNA into mRNA for the production of viral proteins and produce new HIV. Newly created viruses then exit the cell by budding off from the host. HIV carries the danger of ongoing replication and cell infection without termination or immunological control. Because CD4 T cells, which communicate with other immune cells to activate responses to foreign pathogens, are the targets for viral infection and production, their infection leads to reductions in healthy CD4 T cell production, causing the immune system to weaken. This reduction in the immune response becomes particularly concerning after remaining infected for longer periods of time, leading to acquired immune deficiency syndrome (AIDS).

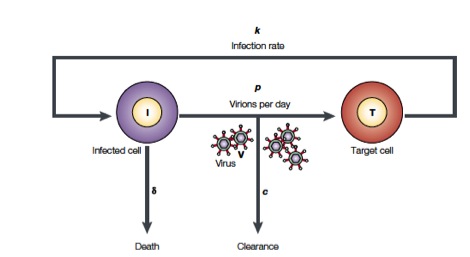

Perelson and coworkers have developed mathematical models to study HIV populations in the eukaryotic organisms. HIV-1 infects cells at a rate \(k\) and is produced from these infected T cells at a rate \(p\). On the other hand, the viruses are lost due to clearance by the immune system of drugs, which occurs at a rate \(c\), and infected cells die at a rate \(\delta\) (Figure from Perelson, Nat. Rev. Immunol., 2, 28-36, 2002)

The above process can be written down as a system of differential equations.

\begin{align} \frac{dT^*}{dt} &= k V_I T - \delta T^*\\[1em] \frac{dV_I}{dt} &= -cV_I\\[1em] \frac{dV_{NI}}{dt} &= N \delta T^{*} - c V_{NI}, \end{align}

Here, \(T(t)\) is the number of uninfected T-cells at time \(t\), and \(T^*\) is the number of infected T cells. Furthermore, there is a concentration \(V_I(t)\) of infectious viruses that infect T cells at the rate \(k\). We also have a concentration \(V_{NI}\) of innocuous viruses. We define \(N(t)\) to be the number of newly produced viruses from one infected cell over this cell’s lifetime. We can measure the total viral load, \(V(t) = V_I(t) + V_{NI}(t)\). If we initially have a viral load of \(V(0) = V_0\), we can solve the system of differential equations to give

\begin{align} V(t;V_0,c,\delta) = V_0e^{-ct} + \frac{cV_0}{c-\delta}\left[\frac{c}{c-\delta}(e^{-{\delta}t} - e^{-ct}) - {\delta}te^{-ct}\right]. \end{align}

The data set¶

We will take viral load data from a real patient (which you can download here). The patient was treated with the drug Ritonavir, a protease inhibitor that serves to clear the viruses; i.e., it modulates \(c\). So, in the context of the model, \(c\) is a good parameter to use to understand the efficacy of a drug.

Let us take a quick look at the data set.

[2]:

df = pd.read_csv("../data/hiv_data.csv", comment="#")

p = bokeh.plotting.figure(

frame_height=250,

frame_width=450,

x_axis_label="days after drug administration",

y_axis_label="RNA copies per mL",

)

p.circle(df["Days after administration"], df["RNA copies per mL"])

bokeh.io.show(p)

After some noisy viral RNA levels early on, the virus begins to be cleared after about a day, bringing the viral load down.

The statistical model¶

For our statistical model (which we will later see is nonidentifiable), we will assume that residual \(i\) is Normally distributed with scale parameter \(\sigma_i = \sigma_0 V(t_i;V_0, v, \delta)\). That is, we assume that the variability scales with the size of the measurement.

\begin{align} &\mu_i = V(t_i;V_0,c,\delta) = V_0e^{-ct_i} + \frac{cV_0}{c-\delta}\left[\frac{c}{c-\delta}(e^{-{\delta}t_i} - e^{-ct_i}) - {\delta}t_ie^{-ct_i}\right] \;\forall i, \\[1em] &V_i \sim \text{Norm}(\mu_i, \sigma_0 \mu_i) \;\forall i. \end{align}

Performing a regression to determine the parameters δ and c¶

The most common procedure to characterize the parameters δ and c is to minimize the sum of the square of the residuals between the theoretical equation given above and the measured data. As we showed in one of the homeworks, this is equivalent to finding the MAP parameter values for a model with a homoscedastic Gaussian likelihood and Uniform priors. Let’s proceed to do this MAP calculation.

In the viral load model, we need to make sure that we don’t get a divide by zero error when \(c \approx \delta\). We will use the fact that

\begin{align} \lim_{c\to\delta} V(t;V_0,c,\delta) = V_0 \left(1 + \delta t + \frac{\delta^2 t^2}{2}\right)\mathrm{e}^{-\delta t}. \end{align}

We can now write our viral load model. Because we will be computing the posterior on a grid for plotting later, I am going to use Numba to speed things up.

[3]:

@numba.jit(nopython=True)

def viral_load_model(p, t):

"""Perelman model for viral load"""

# Unpack parameters

V_0, c, delta = p

# If c and d are close, use limiting expression

if abs(c - delta) < 1e-9:

return V_0 * (1 + delta * t + (delta * t)**2 / 2) * np.exp(-delta * t)

# Proceed with typical calculation

bracket_term = ( c / (c - delta) * (np.exp(-delta * t) - np.exp(-c * t))

- delta * t * np.exp(-c * t) )

return V_0 * (np.exp(-c * t) + c / (c - delta) * bracket_term)

I will now code up the statistical model. I will not use scipy.stats, opting for hand-coding the Normal log PDF by hand for speed.

[4]:

@numba.jit(nopython=True)

def resid(p, t, V):

"""Residuals for viral load model with log parameters."""

return V - viral_load_model(p, t)

def log_likelihood(p, t, V):

"""Log likelihood for viral load model Gaussian."""

if (p < 0).any():

return -np.inf

V0, c, delta, sigma_0 = p

V_theor = viral_load_model(p[:-1], t)

sigma = sigma_0 * V_theor

return st.norm.logpdf(V, V_theor, sigma).sum()

Based on the plot of the data, we will guess \(V_0 \approx 150000\) RNA copies/mL. We’ll guess that \(c\) is dominating the drop in load and choose \(c \approx 3\) days\(^{-1}\). We’ll guess \(\delta\) is slower, say 0.3 day\(^{-1}\). Now, we’ll perform the curve fit.

[5]:

# Unpack Numpy arrays for speed

t = df['Days after administration'].values

V = df['RNA copies per mL'].values

def mle(data):

"""Compute MLE for HIV model."""

# Unpack

t = data[:, 0]

V = data[:, 1]

# Initial guess

p0 = np.array([150000, 3, 0.3, 0.3])

# Find MLE

with warnings.catch_warnings():

warnings.simplefilter("ignore")

res = scipy.optimize.minimize(

fun=lambda p, t, V: -log_likelihood(p, t, V),

x0=p0,

args=(t, V),

method='powell'

)

if res.success:

return res.x

else:

raise RuntimeError('Convergence failed with message', res.message)

popt = mle(df[['Days after administration', 'RNA copies per mL']].values)

# Print results to screen

print("""MLEs:

V_0 = {0:.2e} copies of RNA/mL

c = {1:.2f} 1/days

δ = {2:.2f} 1/days

σ0 = {3:.2f}

""".format(*popt))

MLEs:

V_0 = 1.32e+05 copies of RNA/mL

c = 4.69 1/days

δ = 0.44 1/days

σ0 = 0.21

Now, we’ll plot the regression line with the data. This is what is commonly done, and indeed was done in the Perelson paper, though we will momentarily do a graphical model check, which is a better way to construct the plot.

[6]:

# Plot the regression line

t_smooth = np.linspace(0, 8, 200)

p.line(t_smooth, viral_load_model(popt[:-1], t_smooth),

color='orange', line_width=2)

bokeh.io.show(p)

Graphical model check¶

We’ll now perform a quick graphical model check, using our generative model parametrized by the MLE to generate data sets. First, we’ll code up a function to generate samples and then generate them.

[7]:

rg = np.random.default_rng()

def sample_hiv(p, t, size=1, rg=rg):

"""Generate samples of of HIV viral load curves"""

samples = np.empty((size, len(t)))

for i in range(size):

mu = viral_load_model(p[:-1], t)

samples[i] = np.maximum(0, rg.normal(mu, p[-1]*mu))

return samples

t_theor = np.linspace(0, 8, 200)

samples = sample_hiv(popt, t_theor, size=5000)

And now we can build the plot.

[8]:

p = bebi103.viz.predictive_regression(

samples=samples,

samples_x=t_theor,

data=df[['Days after administration', 'RNA copies per mL']].values,

x_axis_label='days after drug administraion',

y_axis_label='RNA copies per mL',

percentiles=[30, 60, 90],

)

bokeh.io.show(p)

This looks ok, with most measured points falling within middle 90th percentile of he generated data sets.

Confidence intervals of parameters¶

We can proceed to compute confidence intervals for the parameters. We will use our usual method of parametrically drawing new data sets out of the generative distribution parametrized by the MLE. WE first have to write a function to generate new data sets out of the model with the requisite call signature for bebi103.draw_bs_reps_mle().

[9]:

def gen_hiv(p, t, size, rg):

return np.vstack((t, sample_hiv(p, t, size=1, rg=rg))).transpose()

bs_reps = bebi103.draw_bs_reps_mle(

mle,

gen_hiv,

data=df[["Days after administration", "RNA copies per mL"]].values,

mle_args=(),

gen_args=(t,),

size=5000,

n_jobs=3,

progress_bar=True,

)

# Compute confidence intervals and take a look

pd.DataFrame(

data=np.percentile(bs_reps, [2.5, 97.5], axis=0),

columns=["V0*", "c*", "δ*", "σ0*"],

index=["low", "high"],

)

100%|██████████| 1666/1666 [02:42<00:00, 10.24it/s]

100%|██████████| 1668/1668 [02:43<00:00, 10.22it/s]

100%|██████████| 1666/1666 [02:43<00:00, 10.20it/s]

[9]:

| V0* | c* | δ* | σ0* | |

|---|---|---|---|---|

| low | 110162.584060 | 1.595178e+00 | 0.389491 | 0.116078 |

| high | 154764.727819 | 2.499524e+12 | 0.545427 | 0.259767 |

All parameters look like they have reasonable confidence intervals for their MLE except for \(c^*\). There is something funky, here, with the end of the confidence interval being \(10^{12}\) days\(^{-1}\)! We can take a quick look at the ECDF of our samples of \(c^*\).

[10]:

df_res = pd.DataFrame(data=bs_reps, columns=['V0*', 'c*', 'δ*', 'σ0*'])

p = bokeh_catplot.ecdf(

data=df_res,

val='c*',

x_axis_type='log',

x_axis_label='c* (1/days)',

)

bokeh.io.show(p)

Most samples are below 10, but we see 20% of them are well above 10, some positively enormous. This could be a solver issue, or it could be a lurking nonidentifiability. Let’s investigate.

Plotting the log likelihood function¶

To diagnose the issue, we will look at the likelihood function. To start with, we will fix the values of \(V_0\), \(\delta\), and \(\sigma_0\) to their MLEs and plot the likelihood function as a function of \(c\).

[11]:

# c values for plotting

c = np.linspace(0.01, 40, 300)

log_like = [log_likelihood(np.array([popt[0], c_val, *popt[-2:]]), t, V) for c_val in c]

like = np.exp(log_like - max(log_like))

p = bokeh.plotting.figure(

frame_height=250,

frame_width=450,

x_axis_label='c (1/days)',

y_axis_label='likelihood'

)

p.line(c, like, line_width=2)

bokeh.io.show(p)

This looks ok. Let’s take a close look, considering also \(\delta\), plotting the likelihood function in the \(c\)-\(\delta\) plane.

[12]:

# c and δ values for plotting

c = np.linspace(0.01, 100, 200)

delta = np.linspace(0.2, 0.8, 200)

# Compute log-likelihood for each value

log_like = np.empty((200, 200))

for j, c_val in enumerate(c):

for i, delta_val in enumerate(delta):

log_like[i, j] = log_likelihood(

np.array([popt[0], c_val, delta_val, popt[-1]]), t, V

)

like = np.exp(log_like - log_like.max())

# Display the image

hv.Image((c, delta, like), kdims=["c", "δ"]).opts(

cmap="viridis", frame_width=300, frame_height=300

)

[12]:

There is the nonidentifiability! The faint tail at \(\delta \approx 0.4\) days\(^{-1}\) goes off to infinity. If we have \(\delta \approx 0.4\), then any value of \(c\) will give roughly the same likelihood. From our estimates, we expect \(\delta\) to lie somewhere near 0.4 days\(^{-1}\), so this nonidentifiability is something we can likely encounter. It tells us that we cannot actually know \(c\) for sure, that is unless we had an independent measurement for \(\delta\). We would have no way of knowing this if we just solved the least squares problem and reported our values.

Computing the predictive regression and the confidence interval of the MLEs is the minimum you should do with your model. Next term, we will discuss principled modeling techniques that will help protect you from these pathologies that are encountered a lot more than we know.

Computing environment¶

[13]:

%load_ext watermark

%watermark -v -p numpy,pandas,scipy,numba,bokeh,bokeh_catplot,bebi103,holoviews,jupyterlab

CPython 3.7.5

IPython 7.1.1

numpy 1.17.4

pandas 0.24.2

scipy 1.3.1

numba 0.46.0

bokeh 1.4.0

bokeh_catplot 0.1.7

bebi103 0.0.46

holoviews 1.12.7

jupyterlab 1.2.3