BE/Bi 103, Fall 2017: Homework 9¶

Due 1pm, Wednesday, December 6¶

(c) 2017 Justin Bois. This work is licensed under a Creative Commons Attribution License CC-BY 4.0. All code contained therein is licensed under an MIT license.

This document was generated from a Jupyter notebook. You can download the notebook here.

This homework must be submitted as a Jupyter notebook and as an HTML file with complete results. It may be turned in no later than 1 pm on December 8 (maximum of two grace days allowed).

Problem 9.1: Constructing a hierarchical model, 10 pts¶

Consider the following set of experiments. A researcher measures the length of eggs laid by C. elegans worm j. We denote by xij the length of the ith egg from worm j. The researcher measures a total of nj eggs from worm j. She repeats this experiment for k total worms. Build a reasonable hierarchical model for this experiment.

Problem 9.2: Caulobacter growth, 90 pts¶

In this problem, we will study the growth and division of Caulobacter crescentus over time. The lab of Norbert Scherer at the University of Chicago acquired these data and published the work in PNAS, which you can download here.

The clever experimental set-up allows imaging of single dividing cells in conditions that are identical through time. This is accomplished by taking advantage of a unique morphological feature of Caulobacter. The mother cell is adherent to the a surface through its stalk. Upon division, one of the daughter cells does not have a stalk and is mobile. The system is part of a microfluidic device that gives a constant flow. So, every time a mother cell divides, the un-stalked daughter cell gets washed away. In such a way, the dividing cells are never in a crowded environment and the buffer is always fresh. This also allows for easier segmentation.

The data are available here. They were kindly provided by Charlie Wright in the Scherer lab. The frame rate is 1 frame per minute. The interpixel spacing is 0.052 µm. All images were acquired at 24 $^\circ$C.

As with any of the problems in this class, you are encouraged to explore the data beyond what is asked for specifically in the problems statements. The paper is also a very interesting read.

a) The directory sequential_full_field contains 10 sequential frames of a full field of bacteria in the microfluidic device. From these frames, choose which bacteria would be good to use to gather long time course imaging. I.e., choose those that you think will give minimal errors in segmentation. Give your reasoning, including images demonstrating segmentation.

b) The files bacterium_1.tif and bacterium_2.tif are TIFF stacks of time courses for specific cells in from the full field images. From these time courses, compute the time scale of division of the cells. In other words, compute the time between divisions for each division for each cell and plot the results. Comment on any interesting aspects of these data.

c) We know that under ideal conditions, bacterial cells experience exponential growth in bulk. That is, the number of cells grows exponentially. This is possible regardless of how individual cells growth; the repeated divisions leads to exponential growth. In their paper, the authors argue that the growth rate of each cell is also exponential. I.e.,

\begin{align} a(t) = a_0 \mathrm{e}^{\kappa t}, \end{align}

where $a(t)$ is the area of the cell in the image as a function of time and $a_0$ is the area of the cell right after a division has been completed, which we mark as $t = 0$.

As an alternative model, the authors consider a linear growth model, in which

\begin{align} a(t) = a_0 + b t. \end{align}

An exponential curve is approximately linear (with $b = a_0\kappa$) for short time scales. So, it is often difficult to distinguish between a linear and an exponential growth rate. Use the model comparison methods we learned in class to assess the relative probabilities that the growth rate is exponential versus linear. You should use hierarchical models.

Since you are using a hierarchical model, here are a few tips for building and implementing the models. You do not need to take this advice if you do not want to, but I have found that these strategies help.

- When building the model, I typically use weakly informative priors. I do not use Jeffreys priors for scale hyperparameters for reasons related to improper posteriors, as we discussed in Lecture 9.

- Think carefully about your hyperpriors. If you choose an uninformative hyperprior for a level of the model that is data poor, you end up underpooling. For example, in this problem, there are only two mother cells. So, there are only two Caulobacter samples in your data set. If you put a broad prior on the growth rate of Caulobacter cells, these two cells can be effectively decoupled. Even a Cauchy hyperprior can be too broad, and Normal priors might be a better choice.

- The hierarchical structure can make things difficult for samplers. As I'm building my hierarchical model, often approach it with "baby steps." I like to start off with a non-hierarchical model, often with a subset of the data. I perform sampling on this simpler model, taking a small number of samples so I do not have to wait for too long. After making sure everything is ok with this simpler structure, I then add a level to the hierarchy. I again do the sampling with a subset of the data, make sure everything works ok, and then add the next level of hierarchy, and so on. I find this helps me find bugs and little details along the way.

- Use uncentered priors to avoid the funnel of hell.

- When you sample out of the full hierarchical model, the sampler may be slower than you are used to seeing. It will likely also be much slower than sampling out of the non-hierarchical model, even though there are only a few more parameters. NUTS may also be particularly slow during the tuning phase. You may see it progress taking a few seconds per iteration. This is natural.

- In PyMC3, be careful with how you initialize your samplers. You may have hard bounds on certain parameters, such as scale hyperparameters, and using the default

init=jitter+adapt_diaginpm.sample()can place a walker in an illegal places, leading to the dreaded "ValueError: Bad initial energy: nan. The model might be misspecified." error. You might instead considerinit=advi+adapt_diag. In practice, I found thatinit=adapt_diagworks just fine. You might also want to provide goodtestvals for your variables.

Finally, just to give you a sense of what kind of computation time you might expect, I took 5000 samples with 5000 tuning steps from a three-level hierarchical exponential growth model on each of four cores with my MacBook Pro in about 6.5 hours. The hierarchical linear model was a bit fast, at about four hours. If you are going do a calculation of the Bayesian odds ratio where you need to sample at different temperatures, you should use AWS or similar computing resource.

If you choose to use the MCMC Hammer, which performs quite well on this problem, you need to be careful about RAM. You will need at least 600 walkers, so for the sake of this discussion, let's say we have 1000 walkers. If you took 2000 samples at 20 temperatures, you have 4 million samples. Each sample has about 200 parameters, giving 9 billion numbers we have to store. Each number if a 64 bit float, totally 70 GB of memory. So, if you do implement this using the MCMC Hammer, you will either need to pay for a big machine on AWS or related service, or think about which samples you will store as you go along with the calculation.

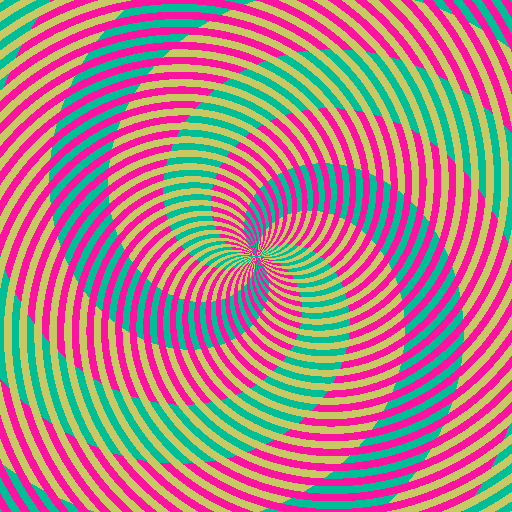

Problem 9.3: Your eyes are not as good as your computer, 5 pts extra credit¶

Download the image below. Use the basic image processing tools we learned in class to replace the beige and magenta colors with white and save the resulting image. Display the original image and the altered image side by side. (This problem was inspired by Dan White.)