Display of MCMC samples

[2]:

import os

import numpy as np

import pandas as pd

import cmdstanpy

import arviz as az

import iqplot

import bebi103

import holoviews as hv

hv.extension('bokeh')

bebi103.hv.set_defaults()

import bokeh.io

import bokeh.layouts

bokeh.io.output_notebook()


In a previous lesson, we learned how to use Stan to sample out of posterior distributions specified by a generative model. In this lesson, we will learn about some techniques to visualize the results. As our first working example, we will again consider the conserved tubulin spindle size model, discussed at length previously, using the data set from Good, et al., Science, 342, 856-860, 2013.

Here is a quick look at the data set.

[3]:

df = pd.read_csv(os.path.join(data_path, "good_invitro_droplet_data.csv"), comment="#")

hv.Scatter(

data=df,

kdims="Droplet Diameter (um)",

vdims="Spindle Length (um)",

)

[3]:

Visualization with ArviZ

ArviZ offers many handy visualizations of MCMC results, and it is a very promising package for quickly generating plots. It currently still has some drawbacks. First, some plots are not rendered properly with Bokeh. This problem will likely be fixed in future releases. Second, some of the aesthetic choices in the plots would not be my first choice. There are other specific drawbacks to the ArviZ visualizations that I will point out as we go along.

For each of the visualizations below, I show how to construct the visualization using both ArviZ and the bebi103 package.

The model and samples

We will briefly repeat the sampling of the model as we did in a previous lesson. As a reminder, the model is

\begin{align} &\log_{10} \phi \sim \text{Norm}(1.5, 0.75),\\[1em] &\gamma \sim \text{Beta}(1.1, 1.1), \\[1em] &\sigma \sim \text{HalfNorm}(10),\\[1em] &\mu_i = \frac{\gamma d_i}{\left(1+(\gamma d_i/\phi)^3\right)^{\frac{1}{3}}}, \\[1em] &l_i \mid d_i, \gamma, \phi, \sigma \sim \text{Norm}(\mu_i, \sigma) \;\forall i. \end{align}

We used the following Stan code to implement this model.

functions {

real ell_theor(real d, real phi, real gamma_) {

real denom_ratio = (gamma_ * d / phi)^3;

return gamma_ * d / (1 + denom_ratio)^(1.0 / 3.0);

}

}

data {

int N;

real d[N];

real ell[N];

}

parameters {

real log10_phi;

real<lower=0, upper=1> gamma_;

real<lower=0> sigma;

}

transformed parameters {

real phi = 10^log10_phi;

}

model {

log10_phi ~ normal(1.5, 0.75);

gamma_ ~ beta(1.1, 1.1);

sigma ~ normal(0.0, 10.0);

for (i in 1:N) {

ell[i] ~ normal(ell_theor(d[i], phi, gamma_), sigma);

}

}
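To check the theoretical curve outside of Stan, a minimal Python sketch of the same formula can be handy. The function name `ell_theor` simply mirrors the Stan function, and the parameter values below are illustrative assumptions, not inferred values.

```python
def ell_theor(d, phi, gamma_):
    """Theoretical spindle length for droplet diameter d (same formula as the Stan function)."""
    denom_ratio = (gamma_ * d / phi) ** 3
    return gamma_ * d / (1 + denom_ratio) ** (1 / 3)


# Illustrative parameter values (assumptions, not results)
phi, gamma_ = 38.0, 0.86

# Small droplets: spindle length is approximately gamma_ * d
small = ell_theor(1.0, phi, gamma_)

# Large droplets: spindle length plateaus near phi
large = ell_theor(1e4, phi, gamma_)
```

The two limits show the role of each parameter: γ sets the slope for small droplets, and φ sets the plateau for large ones.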

Let’s obtain some samples so we can start to look at visualizations.

[4]:

sm = cmdstanpy.CmdStanModel(stan_file="spindle.stan")

data = dict(

N=len(df),

d=df["Droplet Diameter (um)"].values,

ell=df["Spindle Length (um)"].values,

)

samples = sm.sample(

data=data,

chains=4,

iter_sampling=1000,

)

samples = az.from_cmdstanpy(posterior=samples)

INFO:cmdstanpy:found newer exe file, not recompiling

INFO:cmdstanpy:CmdStan start procesing

INFO:cmdstanpy:CmdStan done processing.

[5]:

samples

[5]:

<xarray.Dataset>

Dimensions: (chain: 4, draw: 1000)

Coordinates:

* chain (chain) int64 0 1 2 3

* draw (draw) int64 0 1 2 3 4 5 6 7 ... 992 993 994 995 996 997 998 999

Data variables:

log10_phi (chain, draw) float64 1.581 1.589 1.584 ... 1.579 1.585 1.579

gamma_ (chain, draw) float64 0.862 0.8246 0.8446 ... 0.871 0.8667 0.8619

sigma (chain, draw) float64 4.048 3.897 3.971 ... 3.835 3.694 3.713

phi (chain, draw) float64 38.15 38.83 38.38 ... 37.95 38.48 37.9

Attributes:

created_at: 2022-01-26T17:57:31.461560

arviz_version: 0.11.4

inference_library: cmdstanpy

inference_library_version: 1.0.0

<xarray.Dataset>

Dimensions: (chain: 4, draw: 1000)

Coordinates:

* chain (chain) int64 0 1 2 3

* draw (draw) int64 0 1 2 3 4 5 6 7 ... 993 994 995 996 997 998 999

Data variables:

lp (chain, draw) float64 -1.224e+03 -1.224e+03 ... -1.221e+03

accept_stat (chain, draw) float64 0.9681 0.9316 0.7394 ... 0.843 0.7769

stepsize (chain, draw) float64 0.4268 0.4268 0.4268 ... 0.3972 0.3972

treedepth (chain, draw) int64 3 3 4 2 3 1 3 2 2 3 ... 4 3 3 2 4 2 3 3 3 2

n_leapfrog (chain, draw) int64 7 7 15 3 7 1 7 5 7 ... 11 7 7 15 3 7 7 7 7

diverging (chain, draw) bool False False False ... False False False

energy (chain, draw) float64 1.229e+03 1.227e+03 ... 1.225e+03

Attributes:

created_at: 2022-01-26T17:57:31.467302

arviz_version: 0.11.4

inference_library: cmdstanpy

inference_library_version: 1.0.0

Examining traces

The first type of visualization we will explore is useful for diagnosing potential problems with the sampler.

Trace plots

Trace plots are a common way of visualizing the trajectory a sampler takes through parameter space. We plot the value of the parameter versus step number. You can make these plots using bebi103.viz.trace(). By default, the traces are colored according to chain number. In the sampling we just did, we used Stan’s default of four chains.

Trace plots with ArviZ

To make plots with ArviZ, the basic syntax is az.plot_***(samples, backend='bokeh'), where *** is the kind of plot you want. The backend="bokeh" kwarg indicates that you want the plot rendered with Bokeh. Let’s make a trace plot.

[6]:

az.plot_trace(samples, backend="bokeh");

ArviZ generates two plots for each variable. The plots to the right show the trace of the sampler. In this plot, the x-axis is the iteration number of the steps of the walker and the y-axis is the values of the parameter for that step.

ArviZ also plots a picture of the marginalized posterior distribution for each trace to the left. The issue I have with these plots is that they use kernel density estimation for the posterior plot. KDE involves selecting a bandwidth, which leaves an adjustable parameter. I prefer simply to plot the ECDFs of the samples to visualize the marginal distributions, as I show below.

Trace plots with bebi103

To make trace plots with the bebi103 module, you can do the following.

[7]:

bokeh.io.show(

bebi103.viz.trace(samples)

)

Interpretation of trace plots

These trace plots look pretty good; the sampler is bouncing around a central value. Trace plots are very commonly used, which is why I present them here, but they are not particularly useful. This is not just my opinion. Here is what Dan Simpson has to say about trace plots.

Parallel coordinate plots

As Dan Simpson pointed out in his talk I linked to above, plots that visualize diagnostics effectively are better. We will talk more about diagnostics of MCMC in later lessons, but for now, I will display one of the diagnostic plots Dan showed in his talk. Here, we put the parameter names on the x-axis, and we plot the values of the parameters on the y-axis. Each line in the plot represents a single sample of the set of parameters.

Parallel coordinate plots with ArviZ

[8]:

az.plot_parallel(samples, backend="bokeh");

To make sure we compare things of the same magnitude so we can better see the details of how the parameters vary with respect to each other, we scale the samples by the minimum and maximum values.

[9]:

az.plot_parallel(

samples, var_names=["phi", "gamma_", "sigma"], backend="bokeh", norm_method="minmax"

);
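The min-max scaling idea can be sketched in a few lines of plain Python. This is only an illustration of the transformation, not ArviZ's implementation, and the draws below are made-up values chosen to make the arithmetic easy to follow.

```python
def minmax_scale(values):
    """Rescale values linearly so the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All values identical; map everything to zero to avoid dividing by zero
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Made-up draws on very different scales (illustrative only)
phi_draws = [38.0, 39.0, 37.0]
sigma_draws = [3.5, 4.0, 4.5]

scaled_phi = minmax_scale(phi_draws)
scaled_sigma = minmax_scale(sigma_draws)
```

After scaling, every parameter spans the same range from zero to one, so the lines in a parallel coordinate plot can be compared on a common axis.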

Parallel coordinate plots with bebi103

[10]:

bokeh.io.show(

bebi103.viz.parcoord(

samples,

transformation="minmax",

parameters=["phi", "gamma_", "sigma"],

)

)

Interpretation of parallel coordinate plots

Normally, samples with problems are plotted in another color to help diagnose the problems. In this particular set of samples, there were no problems, so everything looks fine.

Interestingly, the “neck” between phi and gamma_ is indicative of anticorrelation. When φ is high, γ is low, and vice-versa.
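One way to put a number on the anticorrelation is the Pearson correlation coefficient of the draws. The sketch below uses only the first five draws of chain 0 as displayed in this lesson, so it is an arithmetic illustration rather than a reliable estimate; in practice one would use all of the draws. The helper name `pearson_r` is my own.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


# First five draws of chain 0 as displayed in this lesson
phi_draws = [38.1501, 38.8325, 38.3812, 38.6218, 38.0649]
gamma_draws = [0.862005, 0.824594, 0.844581, 0.850322, 0.858429]

r = pearson_r(phi_draws, gamma_draws)
```

A negative value of `r` is consistent with the crossing lines in the neck of the parallel coordinate plot.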

Plots of marginalized distributions

While we cannot in general plot a multidimensional distribution, we can plot marginalized distributions, either of one parameter or of two.

Plotting marginalized distributions of one parameter

There are three main options for plotting marginalized distributions of a single parameter.

1. A kernel density estimate of the marginalized posterior.

2. A histogram of the marginalized posterior.

3. An ECDF of the samples out of the posterior for a particular parameter. This approximates the CDF of the marginalized distribution.

I prefer (3), though (2) is a good option as well. For continuous parameters, ArviZ offers only a KDE plot.
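Part of the appeal of the ECDF is that it has no adjustable parameters: every sample is plotted, with no bandwidth or bin width to choose. A minimal sketch of the computation, with a hypothetical helper name `ecdf` and made-up sample values, might look like this.

```python
def ecdf(samples):
    """Return sorted samples and the fraction of samples less than or equal to each."""
    x = sorted(samples)
    n = len(x)
    y = [(i + 1) / n for i in range(n)]
    return x, y


# Made-up samples (illustrative only)
x, y = ecdf([3.9, 3.7, 4.0, 3.8])
```

Plotting `y` against `x` as a staircase gives the ECDF.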

Plotting marginalized distributions with ArviZ

We already saw that ArviZ can give these plots along with trace plots. We can also plot them directly using az.plot_density(). When using this function, the distributions are truncated at the bounds of the highest probability density region, or HPD. If we’re considering a 95% credible interval, the HPD interval is the shortest interval that contains 95% of the probability of the posterior. By default, az.plot_density() truncates the plot of the KDE of the PDF for a 94% HPD.

[11]:

az.plot_density(

samples, backend="bokeh", backend_kwargs=dict(frame_width=200, frame_height=150)

);
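The "shortest interval" definition of the HPD can be turned into a computation on sorted samples: slide a window containing the required fraction of samples and keep the narrowest one. The sketch below assumes a unimodal marginal posterior and is not necessarily how ArviZ computes it; the helper name `hpd_interval` and the sample values are my own.

```python
import math


def hpd_interval(samples, mass_frac=0.95):
    """Shortest interval containing at least mass_frac of the samples."""
    x = sorted(samples)
    n = len(x)
    # Number of samples that must lie inside the interval
    n_in = max(1, math.ceil(mass_frac * n))
    # Width of every window of n_in consecutive sorted samples
    widths = [x[i + n_in - 1] - x[i] for i in range(n - n_in + 1)]
    start = widths.index(min(widths))
    return x[start], x[start + n_in - 1]


# Made-up samples; the narrowest window holding half of them is (2.0, 2.5)
interval = hpd_interval([1.0, 2.0, 2.5, 10.0], mass_frac=0.5)
```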

Plotting marginalized distributions with iqplot

To get samples out of the marginalized posterior for a single parameter, we simply ignore the values of the parameters that are not the one of interest. We can then use iqplot to make plots of histograms or ECDFs.

To do so, we need to convert the posterior samples to a tidy data frame.

[12]:

# Convert to data frame

df_mcmc = bebi103.stan.arviz_to_dataframe(samples)

# Take a look

df_mcmc.head()

[12]:

| | log10_phi | gamma_ | sigma | phi | chain__ | draw__ | diverging__ |

|---|---|---|---|---|---|---|---|

| 0 | 1.58150 | 0.862005 | 4.04816 | 38.1501 | 0 | 0 | False |

| 1 | 1.58920 | 0.824594 | 3.89717 | 38.8325 | 0 | 1 | False |

| 2 | 1.58412 | 0.844581 | 3.97066 | 38.3812 | 0 | 2 | False |

| 3 | 1.58683 | 0.850322 | 3.89043 | 38.6218 | 0 | 3 | False |

| 4 | 1.58052 | 0.858429 | 3.55571 | 38.0649 | 0 | 4 | False |
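To see what "tidy" means here, the sketch below flattens draws indexed by (chain, draw) into one row per draw, with `chain__` and `draw__` columns like those in the data frame above. It is a plain-Python illustration of the layout, not the implementation in bebi103; the helper name `to_tidy_rows` is hypothetical, and the σ values are the first two draws of chains 0 and 1 from the output displayed earlier.

```python
def to_tidy_rows(draws_by_chain, name):
    """Flatten a nested list indexed [chain][draw] into one dictionary per draw."""
    rows = []
    for chain, draws in enumerate(draws_by_chain):
        for draw, value in enumerate(draws):
            rows.append({name: value, "chain__": chain, "draw__": draw})
    return rows


# First two draws of sigma from chains 0 and 1
sigma_draws = [[4.04816, 3.89717], [3.75434, 3.89937]]
rows = to_tidy_rows(sigma_draws, "sigma")
```

Each row is then a single sample, which is the form plotting tools that operate on data frames typically expect.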

Now, we can use iqplot to make histograms.

[13]:

hists = [

iqplot.histogram(

df_mcmc, q=param, density=True, rug=False, frame_height=150

)

for param in ["phi", "gamma_", "sigma"]

]

bokeh.io.show(bokeh.layouts.gridplot(hists, ncols=1))

A better option, in my opinion, is to make ECDFs of the samples.

[14]:

ecdfs = [

iqplot.ecdf(

df_mcmc, q=param, style="staircase", frame_height=150

)

for param in ["phi", "gamma_", "sigma"]

]

bokeh.io.show(bokeh.layouts.gridplot(ecdfs, ncols=1))

Marginal posteriors of two parameters and corner plots

We can also plot the two-dimensional distribution (of most interest here are the parameters \(\phi\) and \(\gamma\)). The simplest way to do this is to plot each point, possibly with some transparency.

[15]:

hv.Points(df_mcmc, kdims=[("phi", "ϕ [µm]"), ("gamma_", "γ")],).opts(

alpha=0.1,

size=2,

)

[15]:

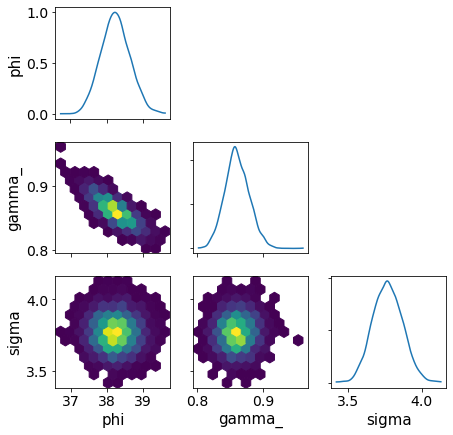

Pair plots with ArviZ

We might like to do this for all pairs of parameters. ArviZ enables this to be done conveniently.

[16]:

az.plot_pair(

samples,

var_names=["phi", "gamma_", "sigma"],

scatter_kwargs=dict(fill_alpha=0.1),

backend="bokeh",

backend_kwargs=dict(frame_width=200, frame_height=200),

);

By adding the marginals=True kwarg, the pair plots now also include a representation of the univariate marginal distributions.

[17]:

az.plot_pair(

samples,

var_names=["phi", "gamma_", "sigma"],

marginals=True,

backend="bokeh",

backend_kwargs=dict(frame_width=200, frame_height=200),

);

A plot like this is often called a corner plot. It shows all bi- and uni-variate marginal distributions.
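As a quick way to count the panels in such a plot, the sketch below enumerates the univariate and bivariate marginals for the three parameters plotted here. It only lists the panels; it does no plotting.

```python
from itertools import combinations

params = ["phi", "gamma_", "sigma"]

# Diagonal panels: one univariate marginal per parameter
univariate = [(p,) for p in params]

# Off-diagonal panels: one bivariate marginal per unordered pair of parameters
bivariate = list(combinations(params, 2))

n_panels = len(univariate) + len(bivariate)
```

For n parameters there are n univariate panels and n(n − 1)/2 bivariate panels, so three parameters give six panels in total.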

ArviZ also offers two-dimensional binning for pair plots in the form of a hex plot. (This does not work properly with a Bokeh backend, so we use a Matplotlib backend.)

[18]:

az.plot_pair(

samples,

var_names=["phi", "gamma_", "sigma"],

kind="hexbin",

marginals=True,

backend="matplotlib",

figsize=(7, 7),

);
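The idea behind two-dimensional binning is to count how many samples land in each cell of a grid and then color the cells by count. The sketch below uses rectangular bins rather than hexagons to keep the arithmetic simple, so it illustrates the concept and not the hexbin algorithm itself. The helper name `bin_2d` and the points are made up.

```python
from collections import Counter


def bin_2d(x, y, x_width, y_width):
    """Count points in rectangular bins; keys are (column, row) bin indices."""
    counts = Counter()
    for xi, yi in zip(x, y):
        counts[(int(xi // x_width), int(yi // y_width))] += 1
    return counts


# Made-up points (illustrative only)
counts = bin_2d(
    [0.1, 0.2, 1.5, 1.6, 1.7],
    [0.1, 0.3, 0.9, 0.8, 2.5],
    x_width=1.0,
    y_width=1.0,
)
```

Binning avoids the overplotting that occurs when many thousands of points are drawn on top of each other.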

Corner plots with bebi103

Corner plots are also implemented in the bebi103 package.

[19]:

bokeh.io.show(

bebi103.viz.corner(

samples,

parameters=[("phi", "ϕ [µm]"), ("gamma_", "γ"), ("sigma", "σ [µm]")],

xtick_label_orientation=np.pi / 4,

)

)

This is a nice way to summarize the posterior and is useful for visualizing how various parameters covary. We can also do a corner plot with the one-parameter marginalized posteriors represented as CDFs.

[20]:

bokeh.io.show(

bebi103.viz.corner(

samples,

parameters=[("phi", "ϕ [µm]"), ("gamma_", "γ"), ("sigma", "σ [µm]")],

plot_ecdf=True,

xtick_label_orientation=np.pi / 4,

)

)

Corner plots are my preferred method of displaying results. All displayable marginal posteriors are plotted and laid out in a logical way.

[21]:

bebi103.stan.clean_cmdstan()

Computing environment

[22]:

%load_ext watermark

%watermark -v -p numpy,pandas,cmdstanpy,arviz,bokeh,holoviews,iqplot,bebi103,jupyterlab

print("cmdstan :", bebi103.stan.cmdstan_version())

Python implementation: CPython

Python version : 3.9.7

IPython version : 7.29.0

numpy : 1.20.3

pandas : 1.3.5

cmdstanpy : 1.0.0

arviz : 0.11.4

bokeh : 2.3.3

holoviews : 1.14.6

iqplot : 0.2.4

bebi103 : 0.1.10

jupyterlab: 3.2.1

cmdstan : 2.28.2